
Machine Learning Theory Illustrated using Lego (Underfitting vs Overfitting)

Learn the Machine Learning theory of Underfitting vs Overfitting, and why this is an important area within Machine Learning. Underfitting and Overfitting play a key role when choosing a Machine Learning model based on your available dataset. If you're unsure about some of the concepts presented in this article, its content is also available in video format on the Vinsloev Academy YouTube page: https://youtu.be/T9NtOa-IITo

The need for IT professionals keeps growing, whether in software engineering, web development, cybersecurity or network administration; the list is almost endless. Discover content from Vinsloev Academy that matches your personal learning goals within the area of IT: https://vinsloev.com/#/discoveryCenter

Underfitting

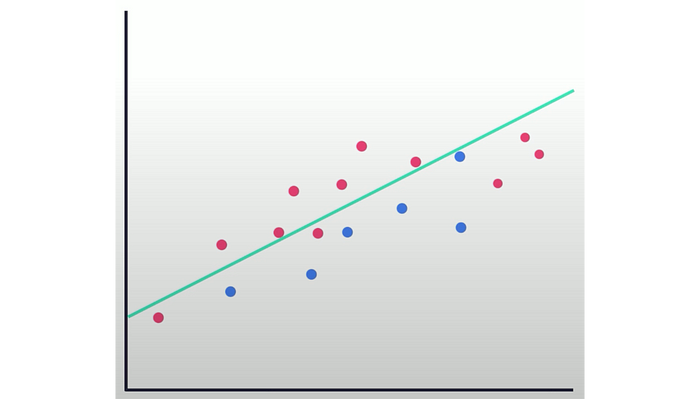

So what is Underfitting? At its core, Underfitting is when your model has high bias. In other words, the problem to be solved is being oversimplified. As a result, your Machine Learning model performs poorly on the training set and therefore also performs poorly on new, real-world input data. This is illustrated in the figure, where we try to separate the red dots from the blue dots using a linear approach.
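To make this concrete, here is a minimal sketch in plain Python (standard library only). The dataset is a hypothetical stand-in, not the one from the figure: red points (label -1) sit inside a circle and blue points (label +1) sit in a ring around it. The learner is a classic perceptron, chosen here purely for illustration. Restricted to a straight line, it cannot fit even the training data, which is exactly what Underfitting looks like; giving the same learner one extra feature, x² + y², lets it draw a circular boundary instead. All function names are assumptions made for this example.

```python
import math
import random


def make_circle_data(n_per_class=200, seed=0):
    """Hypothetical toy dataset: red points (label -1) inside a circle and
    blue points (label +1) in a ring around it, with a gap in between.
    No straight line can separate the two classes."""
    rng = random.Random(seed)
    data = []
    for label, (r_min, r_max) in ((-1, (0.0, 0.8)), (+1, (1.2, 2.0))):
        for _ in range(n_per_class):
            r = rng.uniform(r_min, r_max)
            theta = rng.uniform(0.0, 2.0 * math.pi)
            data.append(((r * math.cos(theta), r * math.sin(theta)), label))
    rng.shuffle(data)
    return data


def linear_features(x, y):
    # A straight-line boundary: w0*x + w1*y + b = 0
    return [x, y, 1.0]


def circular_features(x, y):
    # The extra x^2 + y^2 term lets a "linear" learner draw a circle
    # in the original (x, y) plane
    return [x, y, x * x + y * y, 1.0]


def train_perceptron(data, featurize, epochs=500):
    """Classic perceptron: nudge the weights toward every misclassified point."""
    w = [0.0] * len(featurize(0.0, 0.0))
    for _ in range(epochs):
        mistakes = 0
        for (x, y), label in data:
            f = featurize(x, y)
            score = sum(wi * fi for wi, fi in zip(w, f))
            if label * score <= 0:  # point is on the wrong side (or on the boundary)
                w = [wi + label * fi for wi, fi in zip(w, f)]
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: training data separated
            break
    return w


def accuracy(data, featurize, w):
    """Fraction of points whose predicted sign matches their label."""
    correct = 0
    for (x, y), label in data:
        score = sum(wi * fi for wi, fi in zip(w, featurize(x, y)))
        correct += (1 if score > 0 else -1) == label
    return correct / len(data)


data = make_circle_data()
w_line = train_perceptron(data, linear_features)
w_circle = train_perceptron(data, circular_features)
print("straight line, training accuracy:", accuracy(data, linear_features, w_line))
print("circle, training accuracy:", accuracy(data, circular_features, w_circle))  # 1.0
```

Because the two classes are separated by a gap, the perceptron with the extra feature is guaranteed to finish a full pass without mistakes, so its training accuracy is exactly 1.0. The straight-line version stays well below that however long it trains, since no single line can split a ring from its center: the model is too simple for the data, not short of training.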

A line is drawn right through our dataset, and at first sight it looks somewhat accurate, as we only have red dots above the green line. Looking below the line, however, we have an equal number of blue and red dots. To better understand why choosing a linear model for this particular dataset would be a bad approach, we will investigate the problem from another angle using Lego.
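The tally described above can be reproduced in a few lines of Python. The points are made up to mimic the description (only red above the line, an even red/blue mix below it), and the line y = 0.5x + 2 plays the role of the green line; neither comes from the article's figure, and the helper names are hypothetical. Labelling everything above the line red and everything below it blue gets every point above the line right, but only half of the points below it.

```python
from collections import Counter


def side_counts(points, slope, intercept):
    """Tally labels above and below the line y = slope * x + intercept."""
    above, below = Counter(), Counter()
    for x, y, label in points:
        (above if y > slope * x + intercept else below)[label] += 1
    return above, below


def line_accuracy(points, slope, intercept, above_label, below_label):
    """Accuracy if every point above the line gets above_label and
    every point below it gets below_label."""
    above, below = side_counts(points, slope, intercept)
    return (above[above_label] + below[below_label]) / len(points)


# Hypothetical points: four red above the line, three red and three blue below it
points = [
    (0.0, 3.0, "red"), (1.0, 3.5, "red"), (2.0, 4.0, "red"), (3.0, 4.5, "red"),
    (0.5, 0.0, "red"), (1.5, 0.5, "red"), (2.5, 1.0, "red"),
    (0.0, -1.0, "blue"), (1.0, -0.5, "blue"), (2.0, 0.0, "blue"),
]

above, below = side_counts(points, slope=0.5, intercept=2.0)
print("above:", dict(above), "below:", dict(below))
print(line_accuracy(points, 0.5, 2.0, "red", "blue"))  # 0.7
```

An overall 70% looks passable, but it hides the fact that the model is no better than a coin flip for everything below the line.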

In the illustration above we have made two categories, No Lego and Lego, which correspond to our previous red and blue dots. However, as we now see, the oversimplification of the problem has resulted in our model defining Lego as only bricks with four studs, but what about a Lego minifigure? That…